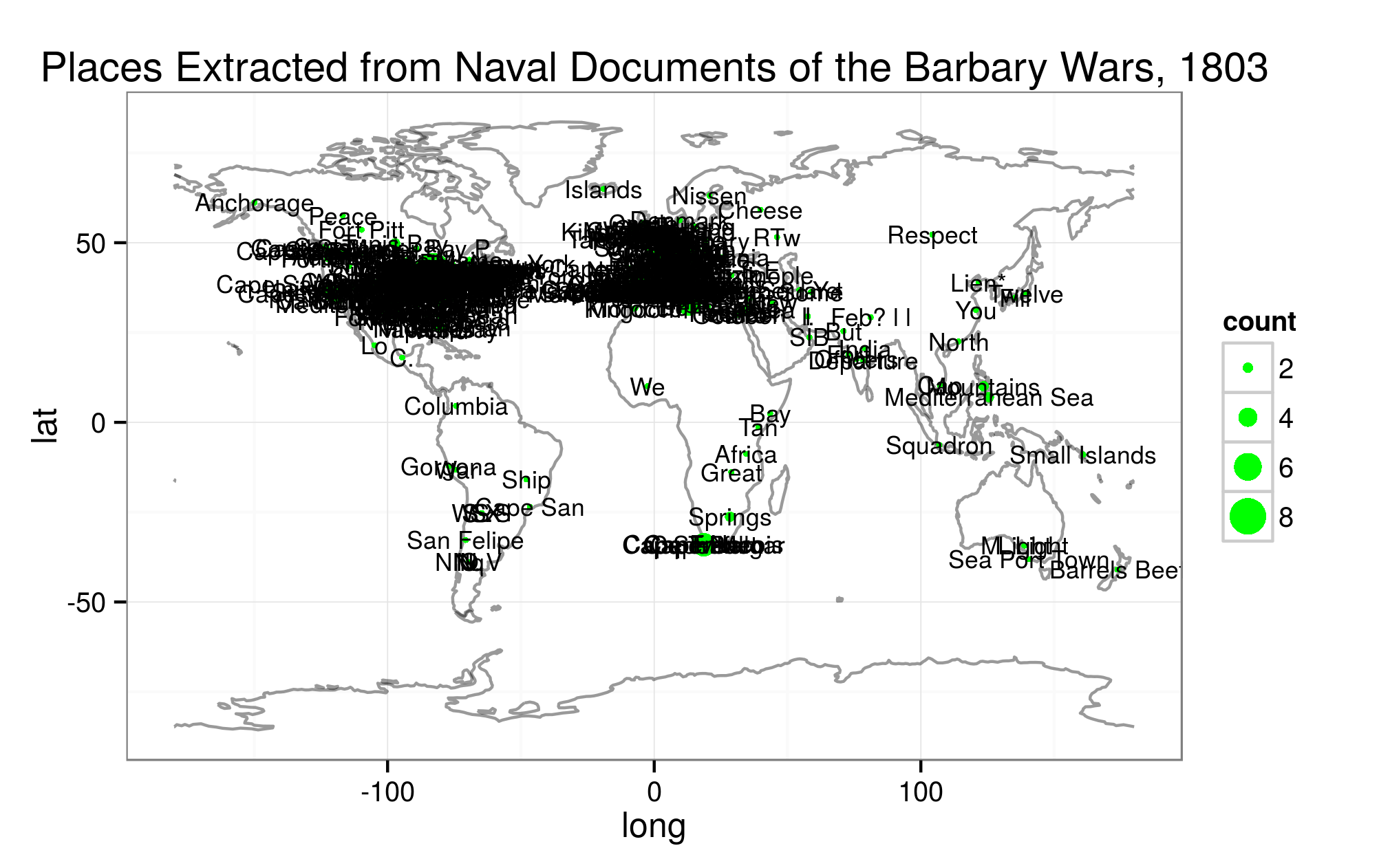

This past week in my Humanities Data Analysis class, we looked at mapping as data. We explored ggplot2’s map functions and did some work with ggmap’s geocoding, among other things. One thing we only barely touched on was automatically extracting place names through named entity recognition. It is possible to do named entity recognition in R, though people say it’s probably not the best tool for the job. But in order to stay in R, I followed a handy tutorial by the esteemed Lincoln Mullen.

I was interested in extracting place names from the data I’ve been cleaning up for use in a Bookworm: the text of the six-volume document collection Naval Documents Related to the United States Wars with the Barbary Powers, published by the U.S. government between 1939 and 1944. It’s a great primary source collection, and a good jumping-off point for any research into the Barbary Wars. The entire collection has been digitized by the American Naval Records Society, with OCR, but the OCRed text is not clean. The poor quality of the OCR has caused problems for almost every kind of data analysis I’ve tried, and this extraction was no exception.

Read More: Named Entity Extraction: Productive Failure? | Abby Mullen.