JISC has issued a call for Digital Infrastructure proposals.

Last week I presented at the Great Lakes College Association’s New Directions workshop on digital humanities (DH), where I tried to answer the question “Why the digital humanities?” But I discovered that an equally important question is “How do you do the digital humanities?” Although participants seemed excited about the potential of digital humanities, some weren’t sure how to get started or where to go for support and training.

Building on the slides I presented at the workshop, I’d like to offer some ideas for how a newcomer might get acquainted with the community and dive into DH work.

In concert with the senior leadership at SU, the Director will also be involved in guiding projects that reflect SU’s emphasis on Scholarship in Action, including ongoing initiatives on the digital humanities and the public role of art, technology, and design (http://syr.edu/about/vision.html). The Director must be an active participant in national debates and organizational work on the public significance of humanities, arts, and design, and have a vigorous scholarly and writing agenda that explores and exemplifies the public dimensions of scholarly and creative work.

(The following talk was given for the 6th Annual Nebraska Digital Workshop.)

I’m going to talk this afternoon about a central paradox of doing digital humanities: what Jerome McGann, one of the leading scholars of electronic texts, calls the problem of imagining what you don’t know.

In Digital Humanities, what we think we will build and what we build are often quite different, and unexpectedly so. It’s this radical disjuncture that offers us both opportunities and challenges…. What we really are asking today is how does scholarly practice change with digital humanities? Or how do we do humanities in the digital age?

[It] wasn’t until the advent of Big Data in the 2000s and the rebranding of Humanities Computing as the “Digital Humanities” that it became the subject of moral panic in the broader humanities.

The literature of this moral panic is an interesting cultural phenomenon that deserves closer study…. We can use the methods of the Digital Humanities to characterise and evaluate this literature. Doing so will create a test of the Digital Humanities that has bearing on the very claims against them by critics from the broader humanities that this literature contains. I propose a very specific approach to this evaluation.

[This paper was presented at the TEI Members’ Meeting]

In recent years, two complementary but somewhat divergent tendencies have dominated the field of digital philology: the creation of models for analysis and encoding, such as the TEI, and the creation of tools or software that support digital editions for editing, publishing, or both (Robinson 2005, Bozzi 2006).

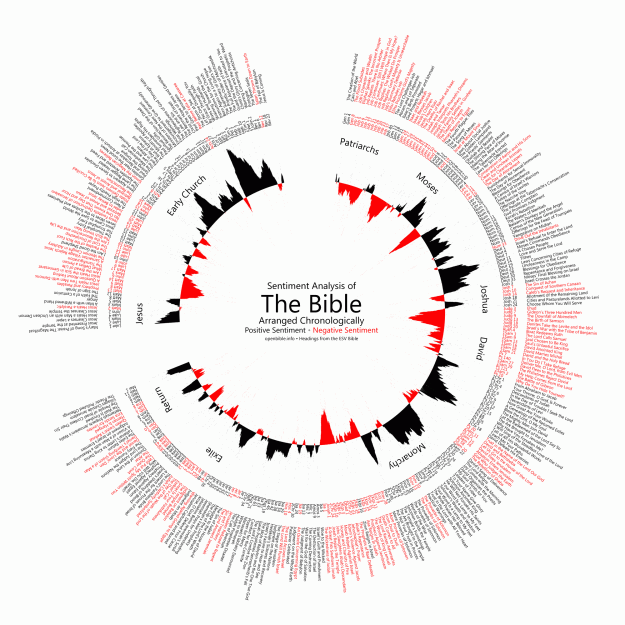

This visualization explores the ups and downs of the Bible narrative, using sentiment analysis to quantify when positive and negative events are happening:

Methodology

Sentiment analysis involves algorithmically determining if a piece of text is positive (“I like cheese”) or negative (“I hate cheese”). Think of it as Kurt Vonnegut’s story shapes backed by quantitative data.
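To make the positive/negative idea concrete, here is a minimal sketch of the simplest possible approach: counting hits against small lists of positive and negative words. This is a swapped-in toy technique, not how the Viralheat API works, and the word lists (`POSITIVE`, `NEGATIVE`) and the function name `lexicon_sentiment` are hypothetical.

```python
# Toy lexicon-based sentiment labeler. Illustrative only: this is NOT the
# Viralheat model, and these word lists are hypothetical placeholders.
POSITIVE = {"like", "love", "good", "joy", "bless"}
NEGATIVE = {"hate", "bad", "sorrow", "curse", "destroy"}


def lexicon_sentiment(text: str) -> str:
    """Label text 'positive', 'negative', or 'neutral' by counting lexicon hits."""
    # Lowercase and strip surrounding punctuation so "Cheese!" matches "cheese".
    words = [w.strip(".,;:!?\"'").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(lexicon_sentiment("I like cheese"))  # positive
print(lexicon_sentiment("I hate cheese"))  # negative
```

Real sentiment services use trained statistical models rather than fixed word lists, which is why they can return a probability instead of a hard label.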

I ran the Viralheat Sentiment API over several Bible translations to produce a composite sentiment average for each verse. Strictly speaking, the Viralheat API only returns a probability that the given text is positive or negative, not the intensity of the sentiment. For this purpose, however, probability works as a decent proxy for intensity.
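The composite step can be sketched as a per-verse average across translations. This assumes each translation has already been scored verse-by-verse (the API call itself is not shown) and that the translations are aligned so index *i* is the same verse in each. The `to_signed` helper, which recenters probabilities so 0.5 maps to 0, is an added convenience for plotting "ups and downs", not part of the original method; all scores below are made-up placeholders.

```python
from statistics import mean


def composite_sentiment(scores_by_translation: dict[str, list[float]]) -> list[float]:
    """Average each verse's P(positive) across translations.

    Assumes every translation maps to one probability per verse, aligned by index.
    """
    columns = list(scores_by_translation.values())
    if not columns or len({len(c) for c in columns}) != 1:
        raise ValueError("need at least one translation, all with the same verse count")
    # zip(*columns) yields one tuple of scores per verse.
    return [mean(verse_scores) for verse_scores in zip(*columns)]


def to_signed(p: float) -> float:
    """Map P(positive) in [0, 1] onto [-1, 1], so an undecided 0.5 becomes 0."""
    return 2 * p - 1


# Hypothetical scores for three verses in two translations.
scores = {"KJV": [0.9, 0.2, 0.5], "ASV": [0.7, 0.4, 0.5]}
composite = composite_sentiment(scores)
signed = [to_signed(p) for p in composite]
print(signed)
```

Averaging several translations smooths out quirks of any single rendering of a verse, which is presumably why a composite is more robust than one translation alone.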

Right now Latent Semantic Analysis is the analytical tool I’m finding most useful. By measuring the strength of association between words or groups of words, LSA allows a literary historian to map themes, discourses, and varieties of diction in a given period. This approach, more than any other I’ve tried, turns up leads that are useful for me as a literary scholar. But when I talk to other people in digital humanities, I rarely hear enthusiasm for it. Why doesn’t LSA get more love? I see three reasons.
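For readers who have not seen LSA in practice, here is a minimal sketch of the core idea: build a term-document matrix, keep only the strongest dimensions of its singular value decomposition, and measure association between words as cosine similarity in that reduced space. It uses raw counts for simplicity; production LSA usually applies a weighting such as tf-idf or log-entropy first, and the toy corpus and function names are hypothetical.

```python
import numpy as np


def lsa_term_vectors(docs: list[str], k: int = 2) -> tuple[list[str], np.ndarray]:
    """Build k-dimensional LSA term vectors from whitespace-tokenized documents."""
    vocab = sorted({w for d in docs for w in d.lower().split()})
    index = {w: i for i, w in enumerate(vocab)}
    # Term-document matrix of raw counts (rows = terms, columns = documents).
    counts = np.zeros((len(vocab), len(docs)))
    for j, doc in enumerate(docs):
        for w in doc.lower().split():
            counts[index[w], j] += 1
    # Truncated SVD: keep only the k strongest latent dimensions.
    u, s, _vt = np.linalg.svd(counts, full_matrices=False)
    return vocab, u[:, :k] * s[:k]


def association(vocab: list[str], vectors: np.ndarray, a: str, b: str) -> float:
    """Cosine similarity of two terms in the reduced space (1 = same direction)."""
    va, vb = vectors[vocab.index(a)], vectors[vocab.index(b)]
    return float(va @ vb / (np.linalg.norm(va) * np.linalg.norm(vb)))


# Hypothetical toy corpus: two "sea" passages and two "grief" passages.
docs = [
    "sea ship sail wave",
    "ship sail harbor sea",
    "love heart sorrow",
    "heart love tears sorrow",
]
vocab, vectors = lsa_term_vectors(docs, k=2)
print(association(vocab, vectors, "wave", "harbor"))  # high: shared context
print(association(vocab, vectors, "wave", "sorrow"))  # near zero: no shared context
```

Note that "wave" and "harbor" never appear in the same document, yet they end up strongly associated because both co-occur with "sea", "ship", and "sail". That indirect association is what lets LSA surface themes and discourses rather than just literal co-occurrence.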

The TEI Special Interest Group on Libraries has released version three of the Best Practices for TEI in Libraries: A Guide for Mass Digitization, Automated Workflows, and Promotion of Interoperability with XML Using the TEI.

The Social Media Research Foundation is pleased to announce the immediate availability of ThreadMill 0.1. ThreadMill is a free and open application that consumes message thread data and produces reports about each author, thread, forum, and board along with visualizations of the patterns of connection and activity. ThreadMill is written in Ruby, and depends on MongoDB, SinatraRB, HAML, and Flash to collect, analyze, and report data about collections of conversation threads.
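As a rough illustration of the kind of per-author report described above, here is a minimal sketch (in Python rather than ThreadMill's Ruby) that counts posts per author, the threads each author joined, and directed reply ties between authors. The `Message` record and `author_report` function are hypothetical; ThreadMill's actual data model and reports may differ.

```python
from collections import Counter, defaultdict
from typing import NamedTuple, Optional


class Message(NamedTuple):
    """Hypothetical message record; ThreadMill's real schema may differ."""
    msg_id: str
    author: str
    thread: str
    reply_to: Optional[str]  # msg_id of the parent message, or None


def author_report(messages: list[Message]):
    """Return posts per author, threads per author, and author-to-author reply counts."""
    by_id = {m.msg_id: m for m in messages}
    posts = Counter(m.author for m in messages)
    threads = defaultdict(set)
    replies = Counter()
    for m in messages:
        threads[m.author].add(m.thread)
        parent = by_id.get(m.reply_to)  # None for thread starters or unknown parents
        if parent is not None:
            replies[(m.author, parent.author)] += 1
    return posts, dict(threads), replies


msgs = [
    Message("m1", "alice", "t1", None),
    Message("m2", "bob", "t1", "m1"),
    Message("m3", "alice", "t1", "m2"),
    Message("m4", "carol", "t2", None),
    Message("m5", "bob", "t2", "m4"),
]
posts, threads, replies = author_report(msgs)
```

The `replies` counter is effectively a weighted edge list, which is the usual input for drawing the "patterns of connection" between participants as a network.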