Matthew Lincoln recently put up a Twitter bot that walks through chains of historical artwork by vector space similarity. https://twitter.com/matthewdlincoln/status/1003690836150792192.

The idea comes from a Google project looking at paths that traverse similar paintings.

This reminded me that I’d been meaning for a while to do something similar with words in an embedding space. Word embeddings and image embeddings are, more or less, equivalent, so the same sorts of methods will work on both. There are (and will continue to be!) lots of interesting ways to bring strategies from convolutional image representations to language models, and vice versa. At first I thought I could just drop Lincoln’s code onto a word2vec model, but the paths it finds tend to oscillate around the high-dimensional space more than I’d like. So instead I coded up a new, divide-and-conquer strategy using the Google News corpus. Here’s how it works.

1. Take any two words. I used “duck” and “soup” for my testing.

2. Find a word that is, in cosine distance, *between* the two words: that is, one that is closer to each of them than they are to each other. Among those candidates, select the one as close to the midpoint as possible. With “duck” and “soup,” that word turns out to be “chicken”: it’s a bird, but it’s also something that frequently shows up in the same contexts as soup.

3. Repeat the process to find a word between “duck” and “chicken.” In this corpus, that turns out to be “quail.” The vector here seems similar to the one above: quail is food relatively more often than duck, but less overwhelmingly so than chicken.

4. Continue subdividing each segment of the path until no more intermediaries exist. For example, “turkey” works as a point between “quail” and “chicken,” but nothing intermediates between turkey and quail, or between turkey and chicken.
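The subdivision in the steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code behind this post: it uses a tiny, hypothetical dictionary of 2-D toy vectors (unit vectors at hand-picked angles chosen to mimic the duck/soup example) instead of the Google News model, and it interprets "as close to the midpoint as possible" as the smallest cosine distance to the sum of the two normalized endpoint vectors. The helper names `find_between` and `subdivide` are likewise hypothetical.

```python
import math

def cos_dist(u, v):
    """Cosine distance: 1 minus the cosine similarity of u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

def unit(v):
    """Scale v to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def find_between(a, b, vectors):
    """Return the word nearest the a-b midpoint among words that are closer
    to both a and b than a and b are to each other; None if there are none."""
    va, vb = vectors[a], vectors[b]
    gap = cos_dist(va, vb)
    # Assumed midpoint: sum of the two unit vectors (only direction matters).
    mid = [x + y for x, y in zip(unit(va), unit(vb))]
    best, best_dist = None, float("inf")
    for word, vw in vectors.items():
        if word in (a, b):
            continue
        if cos_dist(vw, va) < gap and cos_dist(vw, vb) < gap:
            d = cos_dist(vw, mid)
            if d < best_dist:
                best, best_dist = word, d
    return best

def subdivide(a, b, vectors):
    """Recursively split the a-b segment until no intermediary remains."""
    pivot = find_between(a, b, vectors)
    if pivot is None:
        return [a, b]
    left = subdivide(a, pivot, vectors)
    right = subdivide(pivot, b, vectors)
    return left + right[1:]  # the pivot ends `left` and starts `right`

# Hypothetical toy embeddings: unit vectors at hand-picked angles (degrees).
ANGLES = {"duck": 0, "quail": 20, "turkey": 30, "chicken": 45,
          "soup": 90, "guitar": 200}
vectors = {w: [math.cos(math.radians(d)), math.sin(math.radians(d))]
           for w, d in ANGLES.items()}

print(subdivide("duck", "soup", vectors))
# ['duck', 'quail', 'turkey', 'chicken', 'soup']
```

Because every accepted intermediary is strictly closer to both endpoints than they are to each other, each recursive call works on a smaller gap, so the recursion always terminates. On a real vocabulary you would probably want to restrict candidates to frequent words, since a pure-Python scan over millions of vectors at every step is slow.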

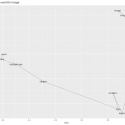

The overall path then sketches out an arc between the two words. (The shape of the arc itself is partly a product of the PCA projection used for the plot, but it’s also a useful reminder that the choice of the first pivot matters a great deal: it sets the region for the entire rest of the search.)
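Since the figure’s arc comes partly from PCA, here is a rough sketch of that kind of plotting step: projecting the vectors along a path onto their first two principal components. It is an assumption about how such a figure might be produced, not this post’s actual code. `pca_2d` and `path_vectors` are hypothetical names, the 3-D vectors are made up, and the pure-Python power iteration is only meant for tiny inputs; with real word vectors you would more likely use an existing implementation such as scikit-learn’s `PCA`.

```python
import math
import random

def pca_2d(rows, iters=200, seed=0):
    """Project rows onto their first two principal components using
    power iteration with Gram-Schmidt deflation (pure Python, small inputs)."""
    n, dim = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(dim)]
    centered = [[r[j] - mean[j] for j in range(dim)] for r in rows]
    # Covariance matrix of the centered rows.
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(dim)]
           for i in range(dim)]
    rng = random.Random(seed)
    components = []
    for _ in range(2):
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
            # Remove directions already captured by earlier components.
            for comp in components:
                dot = sum(a * b for a, b in zip(w, comp))
                w = [a - dot * b for a, b in zip(w, comp)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        components.append(v)
    # Coordinates of each centered row along the two components.
    return [[sum(a * b for a, b in zip(c, comp)) for comp in components]
            for c in centered]

# Hypothetical 3-D vectors standing in for the embeddings along a path.
path_vectors = [[1.0, 0.0, 0.0], [0.9, 0.4, 0.1], [0.7, 0.7, 0.2],
                [0.4, 0.9, 0.1], [0.0, 1.0, 0.0]]
for x, y in pca_2d(path_vectors):
    print(f"{x:+.3f} {y:+.3f}")
```

Because PCA is fit only to the points being plotted, the same path can look more or less curved depending on which vectors go into the fit.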

Read the original post here.